Summary

This paper proposes a seemly simple but powerful trick that allows us to gain higher accuracy with much deeper neural nets than prior work. The idea is, for each layer, instead of learning the “right” mapping from input x to output F(x), it learns the residual: F(x) - x. It turns out this is much easier to learn and converges faster. The paper proposes a neural net as deep as 152 layers, yet with fewer weights than VGG.

ResNet has the following advantages:

-

On very deep neural nets, it addresses the degradation problem, allowing us to gain accuracy when we add more layers.

-

On not-so-deep neural nets, it converges faster than non-residual networks.

-

It can be straightforwardly implemented in most deep learning frameworks with standard feed-forward and back-prop. No need for special support.

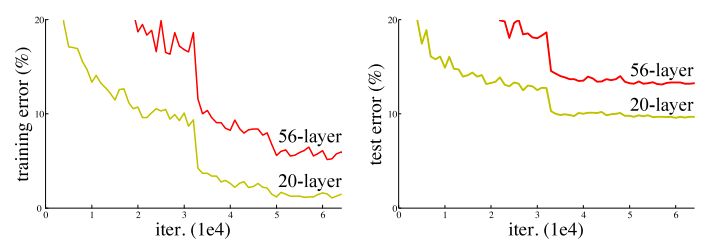

The Counter-intuitive Degradation Problem

It had been believed that the key to gaining better performance is to increase depth. Yet it is observed it may not always help, i.e., increasing depth can hurt accuracy. And it is neither because of over-fitting (training error higher as well), nor because of vanishing gradients (batch normalization used and experimentally verified).

In theory, a deeper network should perform no worse than a shallower one, because we can construct by adding identity layers to the shallow.

Conjecture: multiple non-linear layers have difficulty learning the identity function.

Residual Net

Intuition

-

Due to how neural nets are designed and trained, it is easier to learn near-zeros weights than to learn identity weights.

-

The “optimal” mapping is closer to the identity mapping than to zero mapping (conjecture).

Design

The “basic” design (left): Add a shortcut connection that adds the input to two consecutive layers directly to their output. It must be at least two layers in between otherwise it is equivalent to a single layer.

The “bottleneck” design (right): 1x1 conv layers reduces and restores the dimension, leaving the 3x3 conv layers to process fewer dimensions.

Projection

When F(x) and x have different shapes, there are a few options, including padding zeros or using a project matrix. See details in the paper.

Numbers

-

Even with 152 layers, ResNet-152 still has fewer FLOPs than VGG-16/19 (15.3/19.6 billion).

-

Going from ResNet-34 to ResNet-152 sees considerable benefit (28.54 -> 21.43 error), which is not achievable previously.